“Autonomous systems” is a term that is frequently used (and often misused) to describe systems that – without manual intervention – can change their behavior in response to unanticipated events during operation [1]. This article introduces a more comprehensive definition. It is a work in progress and is published here as an opinion piece to encourage discussion.

Thomas Gamer ABB Corporate Research, Ladenburg, Germany, thomas.gamer@de.abb.com; Alf Isaksson ABB Corporate Research, Västerås, Sweden, alf.isaksson@se.abb.com

There is nothing new about a system that reacts to changing inputs in real time. Cars, for example, already feature a considerable amount of low-level automation, such as electronic stability control (ESC) or anti-lock braking systems (ABS). Although the algorithms may act on myriad inputs and attain considerable complexity, the input data is highly structured and the range of possible actions is limited. In contrast, a self-driving car must deal with inputs that are considerably less structured and that call for a greater range of reactions. The algorithm needs to react to all sorts of vehicles it may encounter, as well as pedestrians, road geometries, weather conditions, erratic behavior of others, and any number of random objects and events that the programmers did not necessarily anticipate. Conventional automation systems enable low-level processes to run without human intervention under normal conditions. Human decisions are still required for more complex tasks. Making automation systems more autonomous is about progressively handing over more and more of these tasks to the system.

Achieving an autonomous system

Many of the inputs required for increased autonomy are already available digitally. These include the sensor and process data of classical automation, but also inputs from numerous other sources, including surveillance cameras, weather data and market data. Artificial intelligence (AI) is a valuable technology for processing this data. There can be a tendency to confuse AI with autonomous systems, but the two are not the same: AI is a technological means through which a specific level of autonomy can be achieved, whereas autonomy is the target that AI can help achieve.

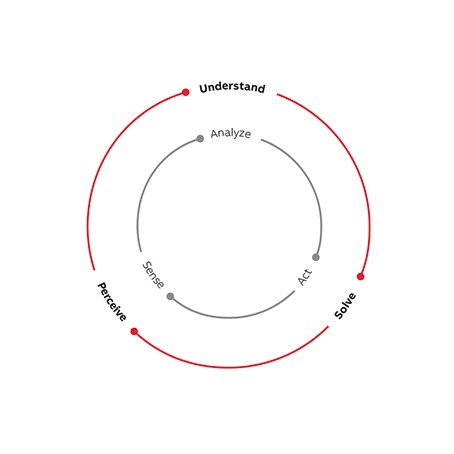

An automation system typically performs precisely defined instructions within a limited scope of operation. A classical control loop can be broken down into the phases of sense, analyze and act. For example, a motor is running too fast (sense), the controller decides to reduce the speed (analyze) and reduces the current to the motor (act). An autonomous system feedback loop adds another shell, applying the same principle but on a more complex level, and also dealing with what is not known or foreseen. A self-driving vehicle identifies an obstruction (perceive), recognizes that a potentially dangerous situation may arise (understand) and takes corrective action by modifying the speed and trajectory of the vehicle (solve) →1.
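The sense, analyze and act phases of the classical control loop can be illustrated with a minimal sketch. It assumes a toy motor whose speed is simply proportional to its current and a plain proportional controller; the names `Motor` and `control_step`, the gain values and the target speed are all hypothetical and chosen only for illustration, not taken from any real drive or product.

```python
from dataclasses import dataclass


@dataclass
class Motor:
    """Toy motor model (an assumption): speed is proportional to current."""
    current: float               # amperes
    rpm_per_amp: float = 100.0   # illustrative gain, not a real motor constant

    def speed(self) -> float:
        return self.rpm_per_amp * self.current


def control_step(motor: Motor, target_rpm: float, k_p: float = 0.005) -> float:
    """Run one pass of the sense / analyze / act loop and return the error."""
    measured_rpm = motor.speed()          # sense: read the current speed
    error = target_rpm - measured_rpm     # analyze: negative if running too fast
    motor.current += k_p * error          # act: lower the current if too fast
    return error


# A motor running at 1200 rpm is brought down towards a 1000 rpm target.
motor = Motor(current=12.0)
for _ in range(50):
    control_step(motor, target_rpm=1000.0)
```

The loop only ever handles one structured input (speed) and one action (adjusting the current). The outer perceive, understand and solve shell of an autonomous system would wrap a loop like this one rather than replace it.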

Levels of autonomy

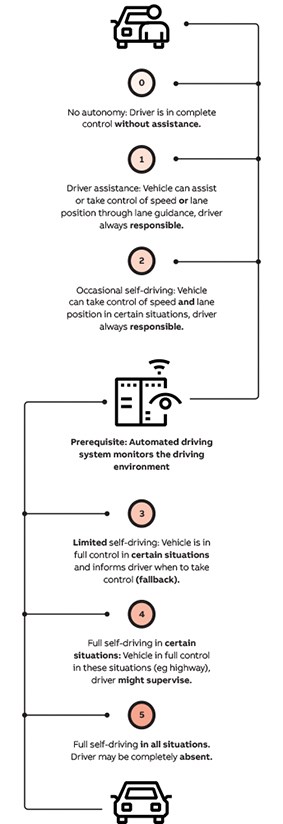

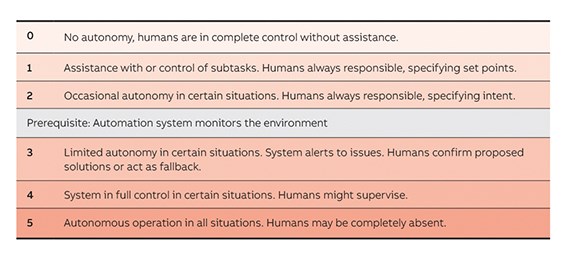

In order to define objectives in the transition to autonomous systems, it is important to establish a taxonomy so that automation providers and customers can define where they are and where they want to be in the short, medium and long term. This article proposes a taxonomy of autonomy with six levels, inspired by definitions from the automotive industry →2,3. It is mainly based on two dimensions: the scope of the automated task and the role of the human. The taxonomy begins with no autonomy (Level 0, at which extensive low-level automation may still be in place) and rises to full autonomous operation (Level 5), in which all decision making and actuation is done by the system.

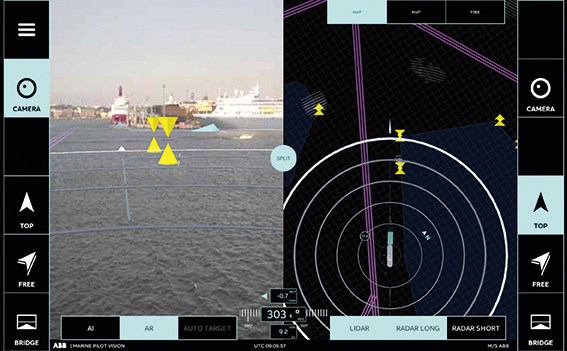

At Level 1, systems provide operational assistance through decision support or remote assistance. Examples include software that helps localize underground mine vehicles, or that provides situational awareness for ships through additional sensing such as LIDAR and radar →4.

Level 2 edges into occasional autonomy. Here, the automation system takes control in certain situations, when and as requested by a human operator, for limited periods of time. People are still heavily involved, monitoring the state of operation and specifying the targets for limited control situations. One example is an autopilot for ships that, on request, takes over speed and navigation control along a pre-defined route, while an active captain remains fully responsible.

At Level 3, automated systems take control in certain situations. This can also be called "limited autonomy". People "sign off," so to speak, confirming proposed solutions or acting as fallbacks. A prerequisite is complete, automated monitoring of the environment. An example would be autonomous drilling followed by autonomous charging of explosives in an underground mine →5. In such a setup, the (remote) operator can still be alerted in exceptional situations, and can take over or confirm a suggested resolution strategy.

At Level 4, the system is in full control in certain situations and learns from its past actions, e.g., to be able to better predict and resolve issues by itself. An example of such a situation is the autonomous docking of a ship, with the captain at most having a supervisory role.

At the top of this taxonomy is Level 5. Full autonomous operation occurs in all situations. No user interaction is required and humans may be completely absent. Today, this is aspirational, but an electric self-driving mining vehicle that loads ore fully autonomously, for instance, would bring major safety and productivity advantages.

There is an important demarcation between Levels 0, 1 and 2 on the one hand and Levels 3, 4 and 5 on the other. In the former group there is some capability for autonomous actions, but these are limited in scope and the human essentially remains in active control at all times. The higher three levels are different because the human is in a passive role at most.
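As a rough illustration, the six levels and the demarcation between the two groups can be written down as a small data structure. This is only a sketch under stated assumptions: the enum member names are shorthand derived from the level descriptions above (the article gives no official name for Level 4), and `human_role` is a hypothetical helper, not part of any ABB tool.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Six-level taxonomy; member names are shorthand, not official labels."""
    NO_AUTONOMY = 0                 # low-level automation may still exist
    OPERATIONAL_ASSISTANCE = 1      # decision support or remote assistance
    OCCASIONAL_AUTONOMY = 2         # system takes control on operator request
    LIMITED_AUTONOMY = 3            # human confirms or acts as fallback
    FULL_CONTROL_IN_SITUATIONS = 4  # human has a supervisory role at most
    FULL_AUTONOMOUS_OPERATION = 5   # all situations, humans may be absent


def human_role(level: AutonomyLevel) -> str:
    """Return the human's role according to the Level 0-2 / 3-5 demarcation."""
    if level <= AutonomyLevel.OCCASIONAL_AUTONOMY:
        return "active"
    return "passive at most"


# Print the role of the human for every level of the taxonomy.
for level in AutonomyLevel:
    print(f"Level {level.value} ({level.name}): human is {human_role(level)}")
```

Using an ordered enum makes the demarcation a single comparison, which mirrors the idea that the levels form a progression rather than a set of unrelated categories.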

The legal issues that arise when problems occur while a system is in control have not yet been fully addressed. There are parallels here with accidents involving self-driving cars. Both the legal situation and public acceptance are still developing.

Autonomy is initially attracting attention in applications similar to self-driving cars. In ABB terms this means ships, mobile robots and harbor cranes, as well as mining vehicles and machines. ABB expects autonomy also to enter other traditional ABB domains such as process industries, power grids and buildings.

Work is in progress to define detailed autonomy levels for all of the above applications based on the presented taxonomy. Besides being a taxonomical tool, such detailed autonomy levels can help companies recognize which level they are at today, which level they want to reach, and where the challenges of making the transition lie. The desired level will be influenced by the individual acceptability of solutions, including aspects of risk, benefit and liability, and by the maturity of the relevant technology.

References

[1] David P. Watson, David H. Scheidt: Autonomous Systems, Johns Hopkins Applied Physics Laboratory, Technical Digest, vol. 26, no. 4, 2005.