Ports are busy and getting busier, and merchant vessels are getting bigger. According to figures published by the United Nations Conference on Trade and Development (UNCTAD), median vessel turnaround time in port increased by 14 percent between 2020 and 2021 [1], while the average annual growth rate of cargo shipping is estimated at around 2.1 percent between 2023 and 2027 [2]. An ABB research program helps navigate the uncertainties.

Stefano Maranò, Deran Maas, Bruno Arsenali ABB Corporate Research Dättwil, Switzerland, stefano.marano@ch.abb.com, deran.maas@ch.abb.com, bruno.arsenali@ch.abb.com; Jukka Peltola, Kalevi Tervo ABB Marine & Ports Helsinki, Finland, jukka.p.peltola@fi.abb.com, kalevi.tervo@fi.abb.com

Congested ports and crowded shipping lanes bring higher risks to vessels and added pressure on crews. Augmenting the crew’s skills with technology brings safety benefits both to the commercial vessels that apply it and to the pleasure craft sailing the same waters.

Modern navigation relies heavily on human perception. However, human senses are sub-optimal for slow, continuous, or wide-angle observation [3]. Research shows that despite their excellent ability to handle uncertainty, solve problems creatively, and apply knowledge and experience when making judgements, humans are nevertheless fallible: a significant percentage of marine accidents are, to some extent, caused by human lapses [4]. Causes include crew fatigue, whose effects can be mitigated by adopting new technology. Enabling ships to see and monitor their surroundings, a prerequisite for remote control and autonomous operations, requires several technologies working together to deliver a viable and trustworthy solution. Such solutions augment human capabilities and support crews in handling vessels safely and avoiding accidents.

One central navigational task is the lookout: the continuous observation of the surroundings for the early detection of any hazard to navigation. The lookout is kept by the crew, aided by binoculars, while other technologies contributing to safe navigation include the Automatic Identification System (AIS) and marine radar. Current practice has some evident limitations. The lookout on duty may miss a nearby vessel because slow, gradual changes are hard to detect, or because attention must be split among multiple targets or sustained for an extended time. Small craft may not be equipped with AIS and may also be missed by radar due to their low radar signature. Moreover, the radars of larger vessels typically have blind areas at close range, since they are designed to detect targets far and early rather than close and at the last minute. The radar is most often positioned on top of the bridge and has a limited vertical field of view (FOV), so it inevitably has a minimum range, typically several hundred meters.

History shows that to increase safety, we need to look at things differently. As the author Wayne Dyer wrote, “If you change the way you look at things, the things you look at change” [5]. In →01b, a smaller vessel is docked alongside a larger vessel and is therefore difficult to distinguish with marine radar. By changing the way we look at the scene, the things we are looking at have changed: the vision-based system is able to detect both the small and the large vessel.
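The radar's minimum range is a matter of simple geometry. Here is a minimal sketch, assuming a flat sea and ignoring sidelobes and shadowing by the ship's own structure. If the lower edge of the beam points α degrees below the horizontal from an antenna h meters above the water, it first meets the sea at d_min = h / tan(α). The function name and both input values are illustrative assumptions, not data from the article.

```python
import math

def radar_min_range(antenna_height_m: float, lower_beam_edge_deg: float) -> float:
    """Horizontal distance at which the lower edge of the radar beam meets a flat sea.

    antenna_height_m: antenna height above the water line (illustrative input).
    lower_beam_edge_deg: angle of the beam's lower edge below the horizontal.
    """
    if lower_beam_edge_deg <= 0:
        raise ValueError("the lower beam edge must point below the horizontal")
    return antenna_height_m / math.tan(math.radians(lower_beam_edge_deg))

# Hypothetical values: a 40 m high antenna whose beam reaches 5 degrees below horizontal.
print(round(radar_min_range(40.0, 5.0)))  # → 457 (meters)
```

Anything closer than d_min passes underneath the beam, which is why a camera looking down from the bridge can complement the radar at short range.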

01a Many targets, some small and not transmitting AIS, are moving ahead of the navigating vessel.

01b Ahead, a small support vessel is docked on the side of a larger navy vessel.

01c Small and very fast recreational jet skis are unpredictably maneuvering in the vicinity of the navigating vessel.

01 Examples of images recorded by the lookout camera installed on Suomenlinna II, a small passenger ferry connecting Helsinki to Suomenlinna Island.

Leveraging machine perception and automation systems can change the way we look at things, enabling safer and more efficient maritime operations. A system of cameras and machine perception algorithms can fill current gaps by providing a continuous, relentless lookout and water clearance functionalities (WCF), detecting small obstacles, and covering blind zones not visible from the bridge.

Another task vital to navigation is the accurate determination of water clearance: the distances from the hull outline of the own vessel to surrounding obstacles. This is particularly relevant when maneuvering in harbor or navigating in confined waters. Water clearance could be derived from a global navigation satellite system (GNSS) position and accurate charts. However, loss of GNSS would leave the ship without vital information, and, depending on the frequency and precision of survey data, charts can vary greatly in accuracy. Restricted visibility from the bridge also means that additional crew are required during docking and tug operations, and the bridge crew must rely on subjective estimates of the size and distance of obstacles, communicated via walkie-talkie.
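To make the definition concrete, here is a minimal sketch. It assumes the hull outline is a closed polygon and the obstacles are points, both in the same vessel-fixed frame in meters, with every obstacle lying outside the hull. Under those assumptions, clearance is the smallest point-to-segment distance between any obstacle and any hull edge. The hull shape and obstacle coordinates are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:  # degenerate segment: plain point distance
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def water_clearance(hull: List[Point], obstacles: List[Point]) -> float:
    """Smallest distance from a closed hull outline (polygon vertices) to any obstacle point."""
    edges = list(zip(hull, hull[1:] + hull[:1]))
    return min(point_segment_distance(o, a, b) for o in obstacles for a, b in edges)

# Hypothetical 100 m x 20 m box-shaped hull centered on the origin, with two obstacle points.
hull = [(-50.0, -10.0), (50.0, -10.0), (50.0, 10.0), (-50.0, 10.0)]
obstacles = [(0.0, 25.0), (70.0, 0.0)]
print(water_clearance(hull, obstacles))  # → 15.0
```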

Monocular-vision system

Today, novel algorithms running on modern hardware allow machines to process visual input and perform complex perception tasks. State-of-the-art deep learning methods rely on neural networks with millions of parameters, whose architecture is tailored to the specific detection task. Modern hardware makes it possible to train such large networks, and the resulting models can perform perception tasks including object detection and semantic segmentation. These machine learning methods are then combined with computer vision and signal processing techniques to bring value to the end user and support crew safety.

This research focuses on a monocular-vision system, ie, a single-camera system. This technology was chosen to bring additional value to existing onboard cameras: often a single camera is already installed, and ABB’s technology can make use of it. This simple and relatively inexpensive hardware has the benefit that its output is easily understood by humans as well as usable by computers. The systems presented here comprise multiple components, and one of the main challenges was to ensure that all of them operate in real time. This requires careful management of data flows from multiple sensors and balancing of algorithm execution between the main CPU and the graphics processing units (GPUs).
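One small piece of that data-flow management is time alignment between sensors. The following is a generic sketch, not the system's actual implementation. Each camera frame is paired with the IMU sample whose timestamp is closest, found by binary search over the sorted IMU timestamps. The function name and the sample rates in the example are hypothetical.

```python
import bisect
from typing import List

def nearest_sample_index(sample_times: List[float], t: float) -> int:
    """Index of the sample whose timestamp is closest to t.

    sample_times must be non-empty and sorted in ascending order.
    """
    i = bisect.bisect_left(sample_times, t)
    if i == 0:
        return 0
    if i == len(sample_times):
        return len(sample_times) - 1
    # Choose between the neighbors just before and just after t.
    before, after = sample_times[i - 1], sample_times[i]
    return i if after - t < t - before else i - 1

# Hypothetical streams: IMU at 100 Hz, camera frames at roughly 30 Hz (times in seconds).
imu_times = [k * 0.01 for k in range(200)]
frame_times = [0.000, 0.033, 0.067, 0.100]
print([nearest_sample_index(imu_times, t) for t in frame_times])  # → [0, 3, 7, 10]
```

Binary search keeps each lookup at O(log n), which matters when several high-rate streams must be matched every frame without falling behind real time.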

Convolutional neural networks

Object detection and semantic segmentation are computer vision and image processing technologies. State-of-the-art models for both are based on convolutional neural networks (CNNs). These networks consist of many convolution kernels that slide along the input feature maps to produce the output feature maps. Because these kernels have many parameters that need to be tuned, a large number of images is required. To address this challenge, ABB collected and annotated tens of thousands of domain-specific images [PAT-1]. To improve generalization, the images were recorded in different conditions and locations, both onboard and offboard. The vessel from which the onboard recordings are made, and on which the electronic lookout and WCF operate, is referred to as the ‘ego-vessel’. When possible, proprietary software was used to record images; the time of day and the location and speed of the ego-vessel were used to start and stop the recording process.
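The sliding-kernel idea can be made concrete with a minimal single-channel sketch. The kernel moves over the input feature map with stride 1 and no padding. Each output value is the sum of element-wise products between the kernel and the patch beneath it. As in most deep learning frameworks, the kernel is not flipped, so strictly this is cross-correlation. Real networks stack many such kernels over many channels and learn their weights from the annotated images. The kernel values here are hand-picked for illustration.

```python
from typing import List

Matrix = List[List[float]]

def conv2d(feature_map: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding), stride-1 2D convolution as used in CNN layers (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(feature_map) - kh + 1
    out_w = len(feature_map[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the patch under it.
            row.append(sum(kernel[u][v] * feature_map[i + u][j + v]
                           for u in range(kh) for v in range(kw)))
        output.append(row)
    return output

# A 4x4 input containing a vertical edge, and a hand-picked 2x2 edge-detecting kernel.
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1], [-1, 1]]
print(conv2d(image, edge_kernel))  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output feature map responds only where the edge lies. In a trained network, the kernel weights are exactly the tunable parameters that require large annotated datasets.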

The goal of object detection is to detect all instances of objects from one or more classes. In marine applications, object detection is often used to detect different types of vessels and marine objects, including, but not limited to, sailing vessels, passenger vessels, and cargo vessels. Furthermore, sailing vessels may be engine-powered or non-engine-powered. Differentiating between them is important for collision avoidance, since engine-powered and non-engine-powered vessels behave differently and should be treated differently in line with the COLREGs.1)
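Detectors typically output several overlapping candidate boxes per object, each with a class label and a confidence score. A common post-processing step is greedy, class-aware non-maximum suppression (NMS); this is an assumption, since the article does not describe the detector's internals. NMS keeps the highest-scoring box, discards boxes of the same class that overlap it by more than an intersection-over-union (IoU) threshold, and repeats. The 0.5 threshold and the (x_min, y_min, x_max, y_max) pixel box format are assumptions.

```python
from typing import List, NamedTuple

class Detection(NamedTuple):
    box: tuple      # (x_min, y_min, x_max, y_max) in pixels (assumed format)
    label: str      # e.g. "sailing vessel", "passenger vessel", "cargo vessel"
    score: float    # detector confidence in [0, 1]

def iou(a: tuple, b: tuple) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy per-class NMS: keep the best box, drop overlapping boxes of the same class."""
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d.score, reverse=True):
        if all(k.label != det.label or iou(k.box, det.box) <= iou_threshold for k in kept):
            kept.append(det)
    return kept

dets = [
    Detection((100, 50, 200, 120), "passenger vessel", 0.92),
    Detection((105, 52, 205, 122), "passenger vessel", 0.80),  # near-duplicate of the first
    Detection((300, 80, 330, 100), "sailing vessel", 0.65),
]
print([d.score for d in non_max_suppression(dets)])  # → [0.92, 0.65]
```

Suppression is applied per class so that, for example, a small support vessel alongside a larger one can survive as a separate detection when the two are labeled differently.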

Semantic segmentation is of vital importance for WCF, as it assigns a class label to each pixel in the image. For example, it allows differentiation between pixels belonging to the classes vessel, water, and land. Segmentation of water is used to estimate the water clearance, while joint segmentation of vessels and water is used in combination with object detection to localize the vessel-water interface [PAT-2]. This interface is used to estimate the locations of target vessels in the world coordinate system.
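Here is a simplified illustration, not the patented method [PAT-2]. First, a label map is formed by taking the arg-max class score at every pixel. Then, within a detected vessel's bounding box, the lowest vessel pixel with water directly beneath it is taken in each image column as a candidate point on the vessel-water interface. The class order, the score layout, and the box format are assumptions.

```python
from typing import List, Tuple

CLASSES = ("water", "vessel", "land")  # hypothetical class order of the network output

def label_map(scores: List[List[List[float]]]) -> List[List[str]]:
    """scores[row][col] holds one score per class; return the arg-max class per pixel."""
    return [[CLASSES[max(range(len(CLASSES)), key=lambda c: px[c])] for px in row]
            for row in scores]

def vessel_water_interface(labels: List[List[str]],
                           box: Tuple[int, int, int, int]) -> List[Tuple[int, int]]:
    """(row, col) pixels inside box = (col_min, row_min, col_max, row_max), inclusive,
    where a vessel pixel has a water pixel directly below it."""
    col_min, row_min, col_max, row_max = box
    points = []
    for col in range(col_min, col_max + 1):
        # Scan upward from the bottom of the box so the lowest contact point wins.
        for row in range(min(row_max, len(labels) - 2), row_min - 1, -1):
            if labels[row][col] == "vessel" and labels[row + 1][col] == "water":
                points.append((row, col))
                break
    return points

# Tiny hypothetical 4x3 score grid: land on top, a vessel on the left, water elsewhere.
W, V, L = [0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]
scores = [
    [L, L, L],
    [V, V, W],
    [V, V, W],
    [W, W, W],
]
labels = label_map(scores)
print(vessel_water_interface(labels, (0, 0, 2, 3)))  # → [(2, 0), (2, 1)]
```

Points found this way are the image points that the calibrated camera model then back-projects onto the sea plane.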

Camera calibration

A monocular imaging system is used to estimate the locations of target vessels in a world coordinate system. Because the system is calibrated with respect to the sea plane, image points on the vessel-water interface can be back-projected from the camera onto the sea plane, and the back-projected points define the location of each target vessel. For this step, accurate camera calibration is essential. Calibration covers both intrinsic parameters (such as focal length, principal point, and lens distortion) and extrinsic parameters (the camera's position and orientation). Intrinsic parameters are usually calibrated in a lab, whereas extrinsic parameters must be calibrated after the camera is installed onboard, during commissioning of the system [PAT-4]. Ship motions during operation must also be accounted for to ensure accurate back-projection, either by using an inertial measurement unit (IMU) or with a vision-based attitude estimation algorithm [PAT-5].
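The back-projection step can be sketched with an ideal pinhole camera, ignoring lens distortion. The sketch assumes intrinsics fx, fy, cx, cy in pixels, a camera height h above the sea plane, and a camera attitude given by a downward pitch and a roll, for example from an IMU. The pixel's viewing ray is rotated into a level frame (x forward, y to port, z up) and intersected with the plane z = 0. All parameter names, the axis conventions, and the numbers are illustrative assumptions.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]

def rot_x(v: Vec, a: float) -> Vec:
    """Rotate v about the forward (x) axis; positive a lowers the starboard side."""
    x, y, z = v
    return (x, y * math.cos(a) - z * math.sin(a), y * math.sin(a) + z * math.cos(a))

def rot_y(v: Vec, a: float) -> Vec:
    """Rotate v about the port-pointing (y) axis; positive a tilts the forward axis down."""
    x, y, z = v
    return (x * math.cos(a) + z * math.sin(a), y, -x * math.sin(a) + z * math.cos(a))

def back_project(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                 height_m: float, pitch_down_rad: float = 0.0,
                 roll_rad: float = 0.0) -> Tuple[float, float]:
    """Intersect the viewing ray of pixel (u, v) with the sea plane.

    Returns (forward, port) in meters from the sea-plane point below the camera.
    """
    # Ray in camera coordinates (x right, y down, z along the optical axis)...
    dx, dy, dz = (u - cx) / fx, (v - cy) / fy, 1.0
    # ...expressed in the forward/port/up frame of a level, forward-looking camera.
    ray = (dz, -dx, -dy)
    # Apply the camera attitude (mounting tilt plus ship motion, e.g. from an IMU).
    ray = rot_y(rot_x(ray, roll_rad), pitch_down_rad)
    if ray[2] >= 0.0:
        raise ValueError("pixel is at or above the horizon; no sea-plane intersection")
    s = height_m / -ray[2]  # ray length factor at which it has descended height_m
    return (s * ray[0], s * ray[1])

# Hypothetical 1920x1080 camera, 30 m above the sea plane, level.
fx = fy = 1000.0
cx, cy = 960.0, 540.0
fwd, port = back_project(1060.0, 640.0, fx, fy, cx, cy, height_m=30.0)
print(round(fwd, 1), round(port, 1))  # → 300.0 -30.0 (300 m ahead, 30 m to starboard)

# The same pixel with the ship pitched 1 degree bow-down lands much closer.
fwd_pitched, _ = back_project(1060.0, 640.0, fx, fy, cx, cy, height_m=30.0,
                              pitch_down_rad=math.radians(1.0))
```

In this example, a 1 degree pitch moves the back-projected point from 300 m to roughly 255 m ahead. This shows why ship motion must be compensated before back-projected positions are passed on.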

The locations of the target vessels are fed to a multiple-target tracker. Each target is tracked with a filter that captures the target dynamics and provides estimates of position, speed over ground (SOG), and course over ground (COG). These estimates can be used, for example, to determine the closest point of approach (CPA) and the time to closest point of approach (TCPA) of target vessels relative to the ego-vessel.
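The article does not specify the tracking filter. A common choice, assumed here, is a linear Kalman filter with a constant-velocity motion model. The state holds position and velocity, measurements are back-projected positions in a local east/north frame, and SOG and COG follow from the velocity estimate. For simplicity, the two axes are filtered independently, which is exact for this linear model when the noise is independent per axis. The noise levels and the 1 s update interval are illustrative.

```python
import math
from typing import Tuple

class AxisCV:
    """1D constant-velocity Kalman filter with state (position, velocity)."""

    def __init__(self, pos: float, pos_var: float, vel_var: float):
        self.x = [pos, 0.0]                       # velocity starts unknown (zero mean)
        self.P = [[pos_var, 0.0], [0.0, vel_var]]

    def predict(self, dt: float, accel_var: float) -> None:
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and white-noise-acceleration Q.
        q11 = accel_var * dt ** 4 / 4
        q12 = accel_var * dt ** 3 / 2
        q22 = accel_var * dt ** 2
        self.P = [[a + dt * (b + c) + dt * dt * d + q11, b + dt * d + q12],
                  [c + dt * d + q12, d + q22]]

    def update(self, z: float, meas_var: float) -> None:
        (a, b), (c, d) = self.P
        s = a + meas_var                          # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s                     # Kalman gain
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


class Track:
    """Hypothetical track object: east/north positions in meters, SOG/COG output."""

    def __init__(self, east: float, north: float):
        self.east = AxisCV(east, pos_var=100.0, vel_var=100.0)
        self.north = AxisCV(north, pos_var=100.0, vel_var=100.0)

    def step(self, east: float, north: float, dt: float = 1.0,
             accel_var: float = 0.1, meas_var: float = 25.0) -> Tuple[float, float]:
        """Predict, update with one back-projected position, return (SOG m/s, COG deg)."""
        for axis, z in ((self.east, east), (self.north, north)):
            axis.predict(dt, accel_var)
            axis.update(z, meas_var)
        ve, vn = self.east.x[1], self.north.x[1]
        sog = math.hypot(ve, vn)
        cog = math.degrees(math.atan2(ve, vn)) % 360.0   # clockwise from north
        return sog, cog


# Noise-free target heading due east at 5 m/s, one position per second.
track = Track(0.0, 0.0)
for k in range(1, 61):
    sog, cog = track.step(east=5.0 * k, north=0.0)
print(f"SOG {sog:.2f} m/s, COG {cog:.0f} deg, 1-sigma east {math.sqrt(track.east.P[0][0]):.1f} m")
```

The square roots of the diagonal of P give one-standard-deviation uncertainties, the kind of quantity shown as a shaded band around a track.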

The marine environment poses some unique challenges to a digital vision system. Harsh lighting and adverse weather, such as dense fog and heavy rain, limit the capabilities of cameras. In the future, sensing technologies operating at non-visible wavelengths could be used in such scenarios.

Electronic lookout

The images in →01 were recorded on Suomenlinna II, a small passenger ferry connecting Helsinki, Finland, to Suomenlinna Island. The camera is installed approximately 10 meters above the waterline and has a horizontal field of view of approximately 60 degrees. The images exemplify situations in which an electronic lookout can bring significant value. In →01a, many targets are moving ahead of the ego-vessel; several of them are small, do not transmit AIS, and may go undetected by radar. In →01b, a small support vessel is docked alongside a larger navy vessel. A radar would hardly be able to distinguish the two targets, but the vision-based system can. On the left, a ferry is detected despite being partly occluded by an island. In →01c, small and very fast recreational jet skis are maneuvering unpredictably in the vicinity of the ego-vessel; an electronic lookout can monitor them continuously.
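With a known horizontal field of view, each pixel column maps to a relative bearing. Here is a minimal sketch for an undistorted pinhole image. The 1920-pixel image width is hypothetical; the 60 and 115 degree fields of view are those quoted for the two lookout cameras. The focal length in pixels is f = (W/2) / tan(HFOV/2), and the bearing of column u is atan((u - W/2) / f). The same arithmetic gives the angle covered by one pixel at the image center, which determines how many pixels a distant small craft occupies.

```python
import math

def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from the horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)

def column_to_bearing_deg(u: float, image_width_px: int, hfov_deg: float) -> float:
    """Bearing of pixel column u relative to the optical axis, positive to the right."""
    f = focal_length_px(image_width_px, hfov_deg)
    return math.degrees(math.atan((u - image_width_px / 2) / f))

WIDTH = 1920  # hypothetical image width in pixels (not stated in the article)
for hfov in (60.0, 115.0):  # fields of view quoted for the two lookout cameras
    f = focal_length_px(WIDTH, hfov)
    deg_per_px = math.degrees(math.atan(1 / f))  # angle of one pixel at the image center
    print(f"HFOV {hfov:.0f} deg: f = {f:.0f} px, right edge at "
          f"{column_to_bearing_deg(WIDTH, WIDTH, hfov):.1f} deg, {deg_per_px:.3f} deg/px")
```

Whatever the sensor width, the narrower 60 degree lens puts roughly 2.7 times as many pixels per degree on a target near the image center as the 115 degree lens, at the cost of angular coverage.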

02 Example of images from MS Finlandia while approaching Helsinki, in which a vessel navigates from the port to the starboard side of the ego-vessel.

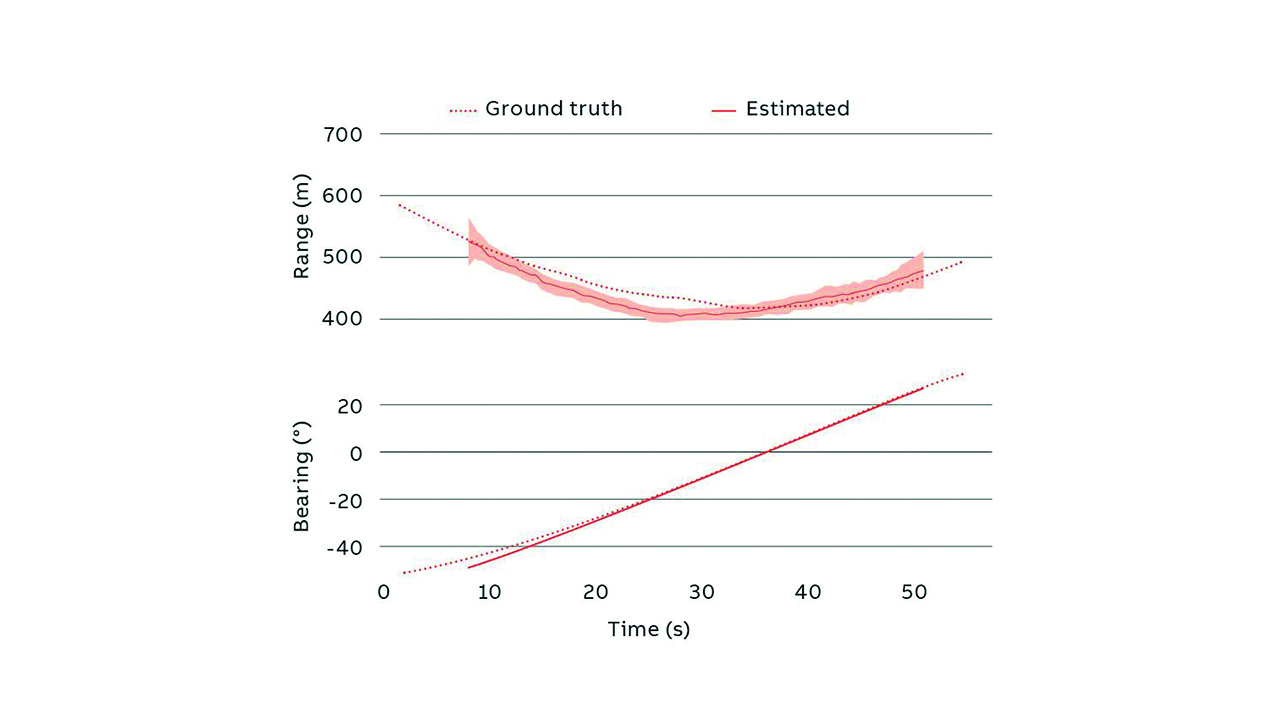

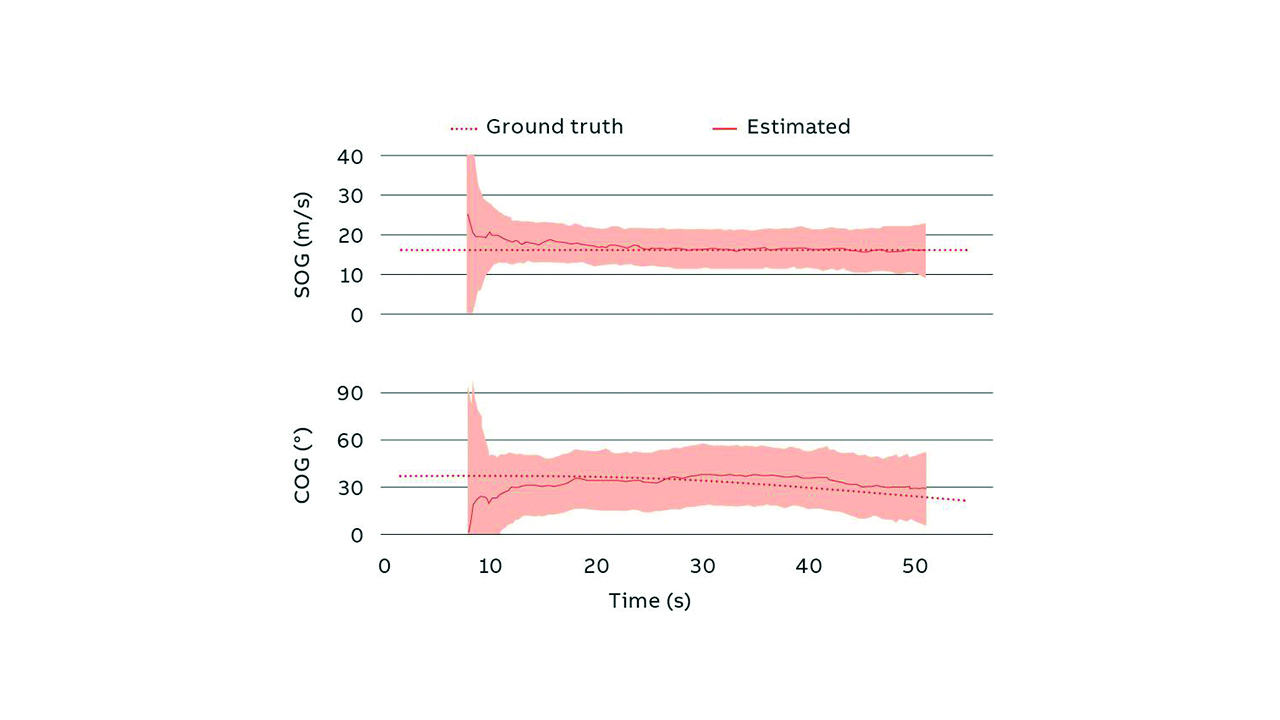

→02 shows a sequence of images from the lookout camera of motor ship (MS) Finlandia, a ROPAX (roll-on/roll-off passenger) vessel operating between Tallinn, Estonia, and Helsinki, Finland. The camera is installed at a height of approximately 30 meters and has a horizontal field of view of approximately 115 degrees. In the sequence, a motorboat crosses from the port to the starboard side of the ego-vessel. The motorboat is detected, as shown by the bounding boxes, and is tracked by the vision system while within the field of view. The resulting track is compared with the corresponding AIS track: →03 compares range and bearing, while →04 compares SOG and COG. In both →03 and →04, the shaded area represents the uncertainty from the tracking filter (one standard deviation). In this example, the distance of the target vessel ranges from approximately 400 m to approximately 600 m, and its SOG is approximately 15 m/s. The error in the estimated range is below 10 percent. The SOG and COG estimates provide the input needed for CPA and TCPA calculations.
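CPA and TCPA follow from relative kinematics, assuming both vessels hold their course and speed. Let p be the target's position relative to the ego-vessel and v the relative velocity (target minus ego). Then TCPA = -(p·v) / |v|² and CPA = |p + v·TCPA|. The sketch below converts SOG and COG into east/north velocity components first. The function names and the crossing scenario are illustrative and not taken from the trial data.

```python
import math
from typing import Tuple

def velocity_en(sog_mps: float, cog_deg: float) -> Tuple[float, float]:
    """East/north velocity components from SOG and COG (degrees clockwise from north)."""
    c = math.radians(cog_deg)
    return sog_mps * math.sin(c), sog_mps * math.cos(c)

def cpa_tcpa(rel_east: float, rel_north: float,
             ego_sog: float, ego_cog: float,
             tgt_sog: float, tgt_cog: float) -> Tuple[float, float]:
    """Return (CPA in meters, TCPA in seconds) for straight-line motion.

    rel_east/rel_north: target position relative to the ego-vessel, in meters.
    A negative TCPA means the closest approach is already in the past.
    """
    ve_ego, vn_ego = velocity_en(ego_sog, ego_cog)
    ve_tgt, vn_tgt = velocity_en(tgt_sog, tgt_cog)
    vx, vy = ve_tgt - ve_ego, vn_tgt - vn_ego
    v_sq = vx * vx + vy * vy
    if v_sq < 1e-12:  # no relative motion: the distance never changes
        return math.hypot(rel_east, rel_north), 0.0
    tcpa = -(rel_east * vx + rel_north * vy) / v_sq
    cpa = math.hypot(rel_east + vx * tcpa, rel_north + vy * tcpa)
    return cpa, tcpa

# Illustrative crossing: ego-vessel heading north at 8 m/s; target 500 m ahead and
# 300 m to port, heading east at 15 m/s, i.e. crossing from port to starboard.
cpa, tcpa = cpa_tcpa(rel_east=-300.0, rel_north=500.0,
                     ego_sog=8.0, ego_cog=0.0, tgt_sog=15.0, tgt_cog=90.0)
print(f"CPA {cpa:.0f} m in {tcpa:.0f} s")  # → CPA 300 m in 29 s
```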

Water clearance

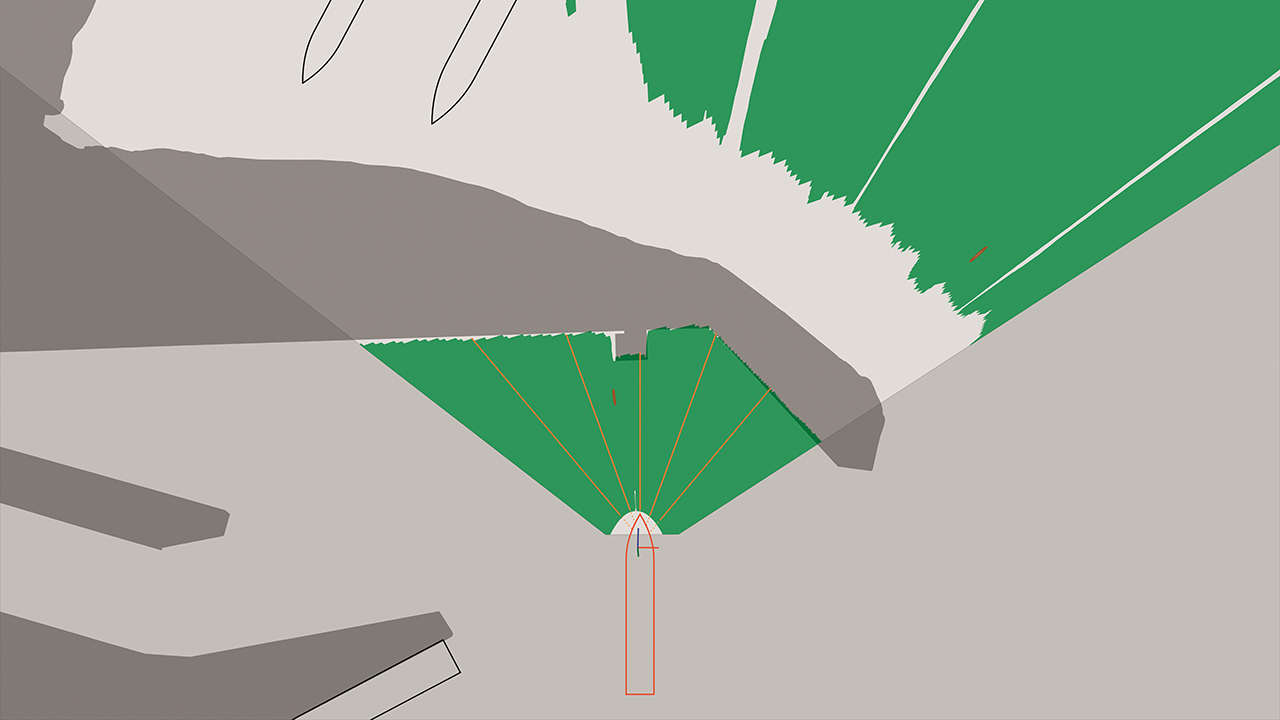

→05 shows a sequence of images captured by the lookout camera of MS Finlandia while leaving Tallinn harbor. The ship is docked bow first; to exit, she reverses and makes a 180-degree turn within the harbor. In the sequence, the water is shown in green, corresponding to the output of the segmentation network. Orange lines and their labels show the clearance between the hull and the first obstacle along pre-defined directions. →06 shows a map of the water overlaid with the harbor structures. Such visual and numerical information about water clearance can provide valuable input to the crew during maneuvering, or it can be used to further improve the safety of autonomous operations.
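Clearances along pre-defined directions can be illustrated with a simple ray march. Here is a minimal sketch, assuming the water segmentation has already been back-projected onto the sea plane as a top-down boolean grid (True for water). Starting from a point on the hull, the ray steps along each direction until it reaches the first non-water cell. The grid, its 1 m resolution, the half-cell step, and the bearing convention are all hypothetical, and this is not the patented method [PAT-3].

```python
import math
from typing import List, Optional, Tuple

def directional_clearance(water: List[List[bool]], cell_m: float,
                          start_xy: Tuple[float, float], bearing_deg: float,
                          max_range_m: float = 500.0) -> Optional[float]:
    """Distance from start_xy along bearing_deg to the first non-water cell.

    water[row][col] is True for water; x grows with col, y grows with row.
    bearing_deg is measured clockwise from the +y axis (hypothetical convention).
    Returns None if no obstacle is found within max_range_m or the ray leaves the grid.
    """
    step = cell_m / 2  # half-cell sampling reduces the chance of skipping thin obstacles
    dx = math.sin(math.radians(bearing_deg))
    dy = math.cos(math.radians(bearing_deg))
    x0, y0 = start_xy
    n = 1
    while n * step <= max_range_m:
        x, y = x0 + dx * n * step, y0 + dy * n * step
        col, row = int(x // cell_m), int(y // cell_m)
        if not (0 <= row < len(water) and 0 <= col < len(water[0])):
            return None  # ray left the mapped area
        if not water[row][col]:
            return n * step
        n += 1
    return None

# Hypothetical 10 m x 10 m grid at 1 m resolution with a quay occupying rows 8 and 9.
grid = [[True] * 10 for _ in range(10)]
for r in (8, 9):
    grid[r] = [False] * 10
print(directional_clearance(grid, 1.0, (5.5, 2.5), 0.0))   # → 5.5
print(directional_clearance(grid, 1.0, (5.5, 2.5), 90.0))  # → None
```

The returned distance is only as fine as the step size, so the grid resolution bounds the precision of each reported clearance.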

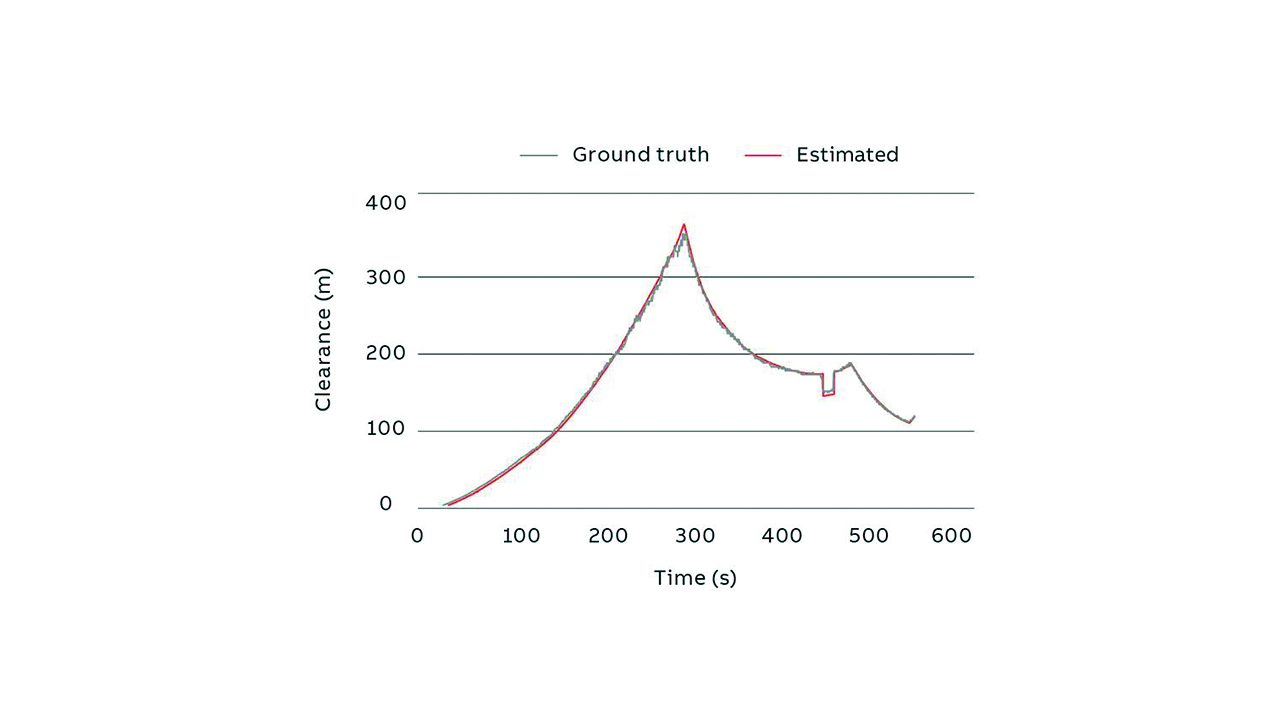

The estimated clearance along the centerline is compared with the ground truth in →07. Ground truth is computed from charts and the GPS-based ego-vessel position. The relative error is below 5 percent, which is more than sufficient for many applications. The small discrepancy at around 300 seconds is due to a loading ramp that is not present in the charts used for the evaluation. This illustrates an advantage of this technology over relying on charts, which may be outdated at the time of use. Another advantage is the lower cost of this technology compared with a LIDAR-based alternative.
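The evaluation compares each estimate with a ground-truth distance and reports the relative error. Here is a minimal sketch, assuming chart features and GPS fixes are given as latitude/longitude. They are projected into a local east/north frame with an equirectangular approximation, which is adequate over harbor-scale distances. The coordinates and the clearance estimate are made up for illustration.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

def to_local_en(lat: float, lon: float, ref_lat: float, ref_lon: float) -> Tuple[float, float]:
    """Equirectangular projection of (lat, lon) to east/north meters around a reference."""
    east = math.radians(lon - ref_lon) * math.cos(math.radians(ref_lat)) * EARTH_RADIUS_M
    north = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    return east, north

def relative_error(estimate: float, truth: float) -> float:
    """Absolute error as a fraction of the ground-truth value."""
    return abs(estimate - truth) / truth

# Hypothetical GPS position of the bow and a charted quay point straight ahead (north).
bow = (59.4440, 24.7700)
quay = (59.4449, 24.7700)
east, north = to_local_en(*quay, *bow)
truth = math.hypot(east, north)
print(f"ground truth {truth:.1f} m, relative error {relative_error(96.0, truth):.1%}")
```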

05a MS Finlandia about to cast off.

05b MS Finlandia just after casting off.

05c MS Finlandia commencing a turn to starboard within one of Tallinn’s ports, towards the exit.

05 A sequence taken from the lookout camera of MS Finlandia while leaving one of Tallinn’s ports. The water clearance map and the directional clearances are computed in real time from the camera stream.

Marine Pilot Vision

The research presented in this article is central to the development of more autonomous solutions for vessels. ABB Ability™ Marine Pilot Vision, part of the ABB autonomous solutions portfolio, provides enhanced situational awareness for both the human operator and autonomous control functions by combining information from a range of sensors and other sources.

ABB Ability™ Marine Pilot Vision can provide a solution that does not rely on any external infrastructure. The image stream from the cameras is analyzed, the map of water clearance around the vessel is calculated, and the clearance from the hull to the closest quay or floating obstacle can be determined [PAT-3].

Vision-based water clearance is used for docking assistance and harbor maneuvering, providing clearance measurements from selected points on the hull’s waterline in selected directions. Docking cameras allow monitoring of areas not visible from the bridge. Water clearances can be visualized in Marine Pilot Vision’s Chart and Camera Views. Vision-based vessel detection supports other target sensing functions (eg, AIS and radar) by extending detection coverage to vessels without AIS, to vessels with a low radar signature, and to sectors that the human user is not constantly observing. Detections can be used for Lookout Assistance and for short-range Target Tracking. Localized detections are visualized as part of Lookout Assistance in Marine Pilot Vision’s Chart and Camera Views.

The way ahead

The first functionalities resulting from this research are already being demonstrated in pilot projects on ferries in Scandinavia and tugs in the US. These projects allow a great deal of data to be collected and lessons to be learned, furthering the development of these systems and enabling wide-scale commercial use of such products. Progressive vessel operators are clearly interested in supporting the day-to-day operations of their crews with situational awareness systems. Yet there is still a way to go from a regulatory standpoint before such technology can be considered a full crew member.2) This research will help realize the potential of vision-based solutions, in this case a monocular-vision system, and their benefits when integrated into the suite of marine safety systems. In addition to bolstering marine safety, such vision-based solutions are an enabler for greater autonomy in the shipping industry.

References

[1] United Nations Conference on Trade and Development, “Review of Maritime Transport 2022”, Geneva, 2022, p. 62.

[2] United Nations Conference on Trade and Development, “Review of Maritime Transport 2022”, Geneva, 2022, p. xvii.

[3] K. Tervo, “Navigating the future”, ABB Review 02/2022, pp. 10–17.

[4] R. Hamann and P. C. Sames, “Updated and expanded casualty analysis of container vessels”, Ship Technology Research, 2022, DOI: 10.1080/09377255.2022.2106218.

[5] W. W. Dyer, Power of Intention: Change the Way You Look at Things and the Things You Look at Will Change. Hay House UK Ltd, 2004.

[6] https://one-sea.org/

[PAT-1] “Method for labelling a water surface within an image, method for providing a training dataset for training, validating, and/or testing a machine learning algorithm, machine learning algorithm for detecting a water surface in an image, and water surface detection system”, European Patent Application no. EP22180133.5, Filed June 21, 2022.

[PAT-2] “Method for determining a vessel-water interface, and method and system for determining a positional relationship between an ego vessel and a target vessel”, European Patent Application no. EP22180131.9, Filed June 21, 2022.

[PAT-3] “Method and system for determining a region of water clearance of a water”, European Patent Application no. EP22212496.8, Filed Dec. 9, 2022.

[PAT-4] “Method and a system for calibrating a camera”, European Patent Application no. EP22200130.7, Filed Oct. 6, 2022.

[PAT-5] “Method and system for determining a precise value of at least one pose parameter of an ego vessel”, European Patent Application no. EP23158743.7, Filed Feb. 27, 2023.

Footnotes

1) COLREGs stands for the Convention on the International Regulations for Preventing Collisions at Sea; it was adopted in 1972 and entered into force on July 15, 1977.

2) ABB and the One Sea partners use the expertise they gain through developing these technologies to identify regulatory requirements for electronic lookouts and other situational awareness systems and to bring them to the International Maritime Organization (IMO). This supports the development of the regulatory framework, which is expected to be released in 2028. One Sea Association is a non-profit global alliance of leading commercial manufacturers, integrators, and operators of maritime technology, digital solutions, and automated and autonomous systems. The association engages in the development of the international legal framework and participates in standardization work [6].